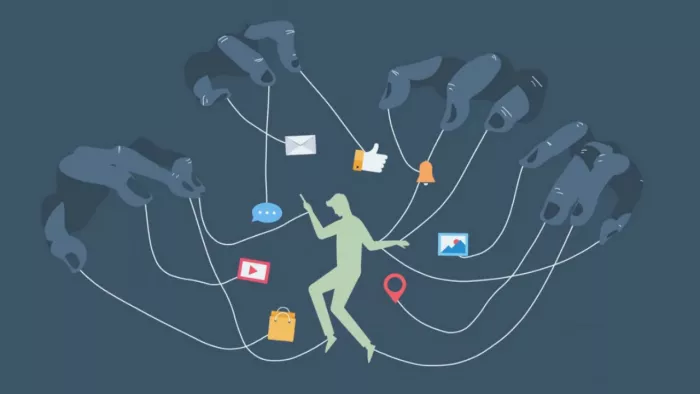

Most of us wake up, scroll through our phones, and trust that what we see online is somehow a reflection of reality. But behind the posts, videos, and articles that appear on our screens lies an invisible hand: algorithms. These are not just code and calculations; they are systems designed to predict what we want, what we’ll watch, and ultimately, what we’ll believe.

The tricky part? They don’t just reflect our choices; they shape them. And often, without us even realizing it.

From Information Overload to Algorithmic Filtering

In the early days of the internet, content was chaotic but equal: blogs, news sites, and forums competed for attention without much order. Then came algorithms, stepping in to “help” us filter through the noise.

Facebook’s News Feed, Google’s search ranking, and YouTube’s recommendation engine all promised to show us what mattered most. But in practice, they began prioritizing what kept us engaged.

A click on a sensational headline, a few extra seconds spent on a video, repeated searches around a single viewpoint: these actions became signals. Over time, the algorithm learned, “This is what you want,” and started giving us more of the same.
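To make that feedback loop concrete, here is a minimal sketch in Python. It is a toy model, not how any real platform works: the topic names, the click probabilities, and the `simulate_feed` function are all assumptions made up for illustration. Each topic starts with an equal share of the feed, and every round a topic's score grows in proportion to how much it was shown and how likely the user is to click on it.

```python
def simulate_feed(click_prob, rounds=10, boost=1.0):
    """Toy engagement loop (hypothetical model, for illustration only).

    click_prob maps each topic to the chance the user engages with it.
    Returns a list with each round's feed shares per topic.
    """
    scores = {topic: 1.0 for topic in click_prob}  # every topic starts equal
    history = []
    for _ in range(rounds):
        total = sum(scores.values())
        # A topic's share of the feed is proportional to its learned score.
        shares = {topic: score / total for topic, score in scores.items()}
        history.append(shares)
        # Expected engagement = exposure * click probability.
        # The more a topic is shown and clicked, the higher it scores next round.
        for topic in scores:
            scores[topic] += boost * shares[topic] * click_prob[topic]
    return history


# Assumed example: the user is twice as likely to click on one viewpoint.
history = simulate_feed({"politics_a": 0.6, "politics_b": 0.3, "science": 0.3})

for round_number in (0, len(history) - 1):
    shares = history[round_number]
    print(round_number, {topic: round(share, 3) for topic, share in shares.items()})
```

Even with a modest difference in click probability, the preferred topic's share of the feed grows every round while the others shrink. Nothing in the loop checks whether the content is accurate or balanced; it only tracks what gets engagement.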

The Echo Chamber Effect

The result? Echo chambers.

When an algorithm feeds us similar content repeatedly, it strengthens our beliefs, making us feel like everyone else must think the same way. For instance, someone who watches one conspiracy video might soon find their YouTube homepage flooded with more of them. Similarly, a person following a few political accounts might begin seeing only one side of the story.

This isn’t accidental; it’s optimization. Algorithms are designed to keep us scrolling, not to present a balanced view of the world. And while that works wonders for user engagement, it can quietly distort how we see reality.

Why We Trust What We See Online

Psychologists have long studied how repetition shapes belief. The “illusory truth effect” shows that when we see the same claim multiple times, we’re more likely to believe it, even if it’s false. Algorithms amplify this effect by making sure we encounter familiar narratives again and again.

Add social proof (likes, shares, and comments) into the mix, and it becomes even more persuasive. If thousands of people endorse something, how wrong can it be? This mix of repetition and social validation creates a powerful pull, nudging us toward accepting curated versions of reality.

Algorithms Are Not Neutral

It’s tempting to think algorithms are objective, but they’re not. They’re designed with goals: maximize engagement, increase ad revenue, or boost watch time. And with those goals, certain types of content thrive: emotional, controversial, or divisive material tends to perform better than calm, balanced reporting.

A headline like “You Won’t Believe What Happened Next” doesn’t rise to the top by accident. It rises because the algorithm knows it keeps people clicking.
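A small sketch can show why the choice of goal matters. The example below is purely illustrative: the `Post` fields, the scores, and the headlines other than the one quoted above are invented, and real ranking systems are far more complex. The only point is that the same set of posts comes out in a different order depending on which objective the ranking function is told to maximize.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    predicted_clicks: float  # hypothetical engagement estimate, 0 to 1
    accuracy: float          # hypothetical editorial quality score, 0 to 1


def rank(posts, objective):
    """Order posts from highest to lowest according to the given objective."""
    return sorted(posts, key=objective, reverse=True)


# Made-up posts and scores, for illustration only.
posts = [
    Post("City council publishes annual budget report", 0.05, 0.95),
    Post("You Won't Believe What Happened Next", 0.40, 0.30),
    Post("Explainer: how the new transit plan works", 0.10, 0.90),
]

by_engagement = rank(posts, lambda post: post.predicted_clicks)
by_accuracy = rank(posts, lambda post: post.accuracy)

print("Ranked for engagement:", [post.title for post in by_engagement])
print("Ranked for accuracy:  ", [post.title for post in by_accuracy])
```

The sorting code is identical in both cases. What changes the outcome is the objective passed in, which is a decision made by whoever builds the system.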

When Beliefs Become Identities

Once beliefs are shaped by what we see, they begin to define who we are. This is where algorithms become more than just tools; they start influencing identities. Being part of a group online often feels like belonging to a community. But it can also mean we become resistant to opposing views, dismissing them as biased or uninformed.

The danger lies in polarization. Studies have shown that algorithm-driven platforms can push people further apart, creating divides that feel personal and unbridgeable.

Can We Outsmart the System?

The truth is, we can’t completely escape algorithms. They’re embedded in almost everything we use online. But we can become more mindful of how they work and how they affect us.

- Diversify your sources: Don’t rely on one platform for all your information.

- Pause before sharing: Ask yourself if the headline is designed to spark outrage rather than inform.

- Seek disconfirmation: Look for content that challenges your views; it’s uncomfortable but healthy.

- Understand the motive: Remember, platforms are businesses. Their priority is your attention, not your clarity.

Awareness as Power

Algorithms may feel invisible, but their impact is everywhere, from how we shop to the news we read to the opinions we form. The question is not whether they shape what we believe; they do. The real question is whether we allow them to define our reality without question.

By approaching what we see online with curiosity rather than blind acceptance, we can reclaim a measure of control. After all, belief should be a choice, not just the outcome of a recommendation engine.
