Computer-assisted media, such as image manipulation and CGI, have been in mainstream use since the 1990s. While machines did much of the heavy lifting, humans directed these tools and retained complete creative control.

Synthetic media, on the other hand, refers to content generated wholly or partly by technologies we colloquially call artificial intelligence. These technologies include large language models, diffusion models, text-to-speech systems, music and story generators, 3D model generators, and more.

On paper, synthetic media is a neutral technological development with countless potentially positive applications. The ability to remove tricky elements from photos with generative fill or create a complex infographic in minutes from a few prompts comes to mind first. Sadly, synthetic media is also being used to create a deluge of damaging deepfakes that are increasingly hard to distinguish from the real thing.

This article will help you familiarize yourself with synthetic media's past and the emerging trends shaping its future. Most importantly, you'll also discover the steps you can take now to remain informed of and protected from synthetic media's harmful aspects.

A Brief History of Synthetic Media

Synthetic media took its tentative first steps in the 1950s and 1960s, when composers and computer researchers ran early experiments in pattern recognition and used the results to produce computer-generated music.

The field advanced slowly and inconsistently until 2014, when Generative Adversarial Networks (GANs) were introduced. A GAN consists of two neural networks, a generator and a discriminator, pitted against each other.

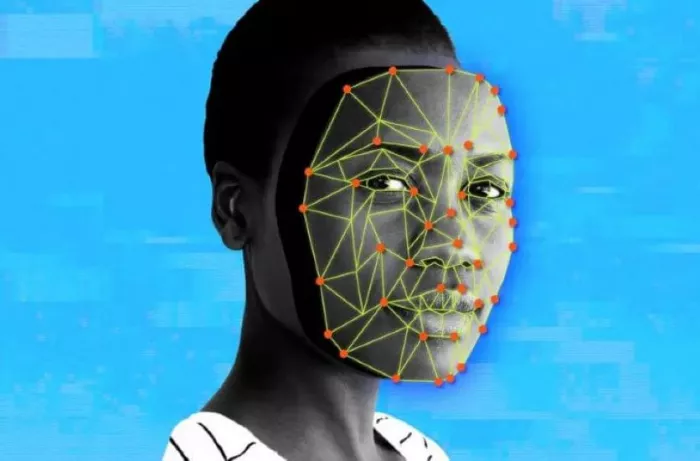

The discriminator receives both real samples, such as photos of people or places, and the generator’s output, and tries to tell the two apart. This creates a feedback loop in which the generator becomes more adept at creating convincing images while the discriminator gets better at discerning what’s real from what’s fake. Over thousands or millions of iterations, this adversarial process produces synthetic images that humans find increasingly hard to tell apart from real ones.
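The feedback loop described above can be sketched in a few lines of Python. This is a deliberately tiny illustration, not how production image generators are built. It assumes one-dimensional "data" (numbers clustered around 4) instead of photos, a generator of the form a*z + b, and a simple logistic discriminator. All function names, parameter names, and default values are hypothetical choices made for this example.

```python
import math
import random


def sigmoid(x):
    """Logistic function, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def discriminator_step(w, c, real, fake, lr):
    """Nudge the discriminator D(x) = sigmoid(w*x + c) so that it scores
    the real sample higher and the fake sample lower."""
    d_real = sigmoid(w * real + c)
    d_fake = sigmoid(w * fake + c)
    # Gradient ascent on log D(real) + log(1 - D(fake)).
    w += lr * ((1.0 - d_real) * real - d_fake * fake)
    c += lr * ((1.0 - d_real) - d_fake)
    return w, c


def generator_step(a, b, w, c, z, lr):
    """Nudge the generator G(z) = a*z + b so that its output looks
    more "real" to the current discriminator."""
    fake = a * z + b
    d_fake = sigmoid(w * fake + c)
    # Gradient ascent on log D(fake), taken with respect to the fake sample.
    grad_fake = (1.0 - d_fake) * w
    a += lr * grad_fake * z
    b += lr * grad_fake
    return a, b


def train_toy_gan(steps=2000, lr=0.01, real_mean=4.0, real_std=0.5, seed=0):
    """Alternate discriminator and generator updates (assumed toy setup)."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters
    w, c = 0.0, 0.0  # discriminator parameters
    for _ in range(steps):
        real = rng.gauss(real_mean, real_std)  # a "real" sample
        z = rng.gauss(0.0, 1.0)                # random noise fed to the generator
        fake = a * z + b                       # the generator's forgery
        w, c = discriminator_step(w, c, real, fake, lr)
        a, b = generator_step(a, b, w, c, z, lr)
    return a, b, w, c


if __name__ == "__main__":
    a, b, w, c = train_toy_gan()
    print(f"generator: a={a:.3f}, b={b:.3f}; discriminator: w={w:.3f}, c={c:.3f}")
```

Each pass through the loop mirrors the cycle in the text: the discriminator is corrected using one real and one fake sample, then the generator is adjusted in whatever direction currently fools the discriminator. A discriminator this simple can only compare rough averages, and toy setups like this one may oscillate rather than settle, so treat it as a picture of the mechanism rather than a working forgery machine.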

In 2017, Google researchers reshaped natural language processing by introducing the transformer architecture. Transformers led to the development and rapid evolution of large language models that could establish and keep track of relations and dependencies between words across larger bodies of text. Coupled with advances in the speed and scale at which datasets could be used for training, this eventually led to the refined LLMs and generative AI widely used today.
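How a model "keeps track of relations between words" can be illustrated with the attention step at the heart of transformers. The sketch below is a simplified assumption-laden version: each word is a short hand-written list of numbers, and the learned query, key, and value projections that real models use are left out. The function names are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention over a list of word vectors.

    Simplification: each vector serves as its own query, key, and value.
    Returns the blended output vectors and the attention weights.
    """
    dim = len(vectors[0])
    outputs, weights = [], []
    for query in vectors:
        # Score how strongly this word relates to every word in the text.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        row = softmax(scores)
        weights.append(row)
        # Blend all word vectors according to those weights.
        outputs.append(
            [sum(wt * vec[i] for wt, vec in zip(row, vectors)) for i in range(dim)]
        )
    return outputs, weights


if __name__ == "__main__":
    # Three made-up "words": the first two are similar, the third is not.
    words = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
    _, attention = self_attention(words)
    for row in attention:
        print([round(x, 3) for x in row])
```

In this toy input, the first word gives more weight to the second, similar word than to the third, unrelated one. Because every word is compared with every other word directly, distance within the text does not weaken the connection, which is what makes long-range dependencies tractable.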

The Current Impact of Deepfakes on Society

It’s now possible to use a handful of existing real images and voice samples to create "photos", video, or audio of people. The rapid advancement, democratization, and simplification of AI tools have caused an exponential rise in deepfakes' scope and depravity.

Disgruntled lovers post fake sexually explicit content featuring their exes. Deepfakes of C-suite executives are replacing spear phishing and BEC (Business Email Compromise) as a successful means of defrauding companies of millions.

Such misuse of synthetic media isn’t just the purview of individual actors. Documented cases of state-sponsored deepfakes also exist. These sow misinformation or distrust and have become a powerful tool for swaying public opinion and influencing the democratic process.

Emerging Trends

The most recent developments suggest that synthetic media is on track to become both more realistic and hyper-individualized. For example, AI-generated videos look much smoother and more believable than they did even a year ago, and they now come with realistic integrated sound.

Virtual influencers and “AI friends” are becoming more prevalent as well. They’re able to steer conversations or entire streams based on audience input. Moreover, their once static faces are taking on complex real-time expressions, reducing the uncanny valley effect even further. Magazines like Vogue are even putting pictures of synthetic models into their print releases, adding a new facet to the ongoing debate over increasingly unrealistic beauty standards.

What Can You Do to Stay Safe?

The line between real and synthetic media is already irretrievably blurry. Unless they take action, the average person will become increasingly susceptible to deepfakes and other exploitative synthetic media. So, what can you do?

A two-pronged approach works best. On the one hand, you’ll want to focus on recognizing deepfakes and avoiding becoming a victim. That includes approaching unfamiliar media with skepticism. If something seems suspicious, check it against reputable sources. If you suspect a deepfake scam, ask for clarification and contact the real person through a channel you already trust.

On the other hand, it’s prudent to take measures that ensure there’s as little data as possible on you with which deepfakes could be created. Managing your digital footprint by posting less on social media and using a VPN are excellent proactive measures. VPNs can be particularly impactful since they encrypt your connection and hide information like your IP address and internet activity. This gives malicious actors fewer behavioral and location-based details to work with, making it harder for them to create believable deepfakes.

Since it's impossible to control every bit of information about you that eventually gets online, you'll want to supplement these proactive steps with an effective reactive measure, like engaging identity protection services. They monitor the unsavory parts of the internet for mentions of leaked information like your account credentials or personal details. Awareness of these leaks lets you take preventative or legal action earlier and can reduce the potential fallout if deepfakes are created.

Conclusion

While the advancement of AI has been groundbreaking, we need to be aware of its true nature. AI helps us be more efficient, cutting tasks that used to take hours down to minutes. It helps us find creative ways to solve the problems we face. Yet in the wrong hands, this pioneering technology can have profound implications for individuals and society.

That’s why it’s vital to understand these consequences and take the necessary steps to protect ourselves from the misuse of AI and the dangers it may entail.
